Image Credit: https://www.inovex.de/

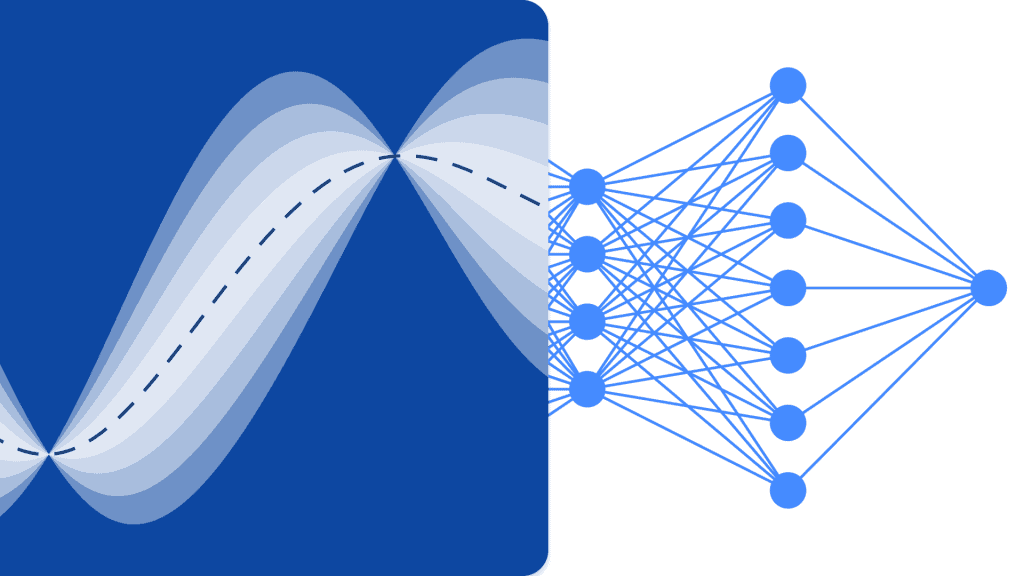

What is Deep Learning? It is a branch of Machine Learning built on neural networks, which are models loosely inspired by the human brain. A neural network is made up of artificial neurons, analogous to the neurons that form the fundamental unit of the brain. The study of these networks as a whole is called deep learning.

The end result of training a neural network is called a deep learning model. Deep learning mostly works with unstructured data, from which the model extracts features on its own through repeated training. When a model designed for one dataset is made available as the starting point for developing another model with a different dataset and features, the approach is known as Transfer Learning. In simple terms, Transfer Learning is a popular method in which a model developed for one task is reused as the starting point for a model on another task.

Transfer Learning

Humans have used transfer learning since time immemorial. Although the field is relatively new to machine learning, we apply it instinctively in almost every situation: when we face a new problem or task, we try to apply the knowledge gained from past experience. For instance, if we know how to ride a bicycle and are asked to ride a motorbike for the first time, we draw on our cycling experience for steering the handlebars and balancing the bike. This simple concept forms the basis of Transfer Learning.

To understand the basic notion of Transfer Learning, consider a model M1 that has been successfully trained to perform task A. If the dataset for task B is too small, so that a new model Y cannot be trained on it efficiently or overfits the data, we can use a part of model M1 as the base to build model Y for task B.

Why Transfer Learning?

According to Andrew Ng, one of today’s leading advocates of Artificial Intelligence, “Transfer Learning will be the next driver of ML success”. He said this in a talk at the Conference on Neural Information Processing Systems (NIPS 2016). There is no doubt that the success of ML in industry today is primarily due to supervised learning. Going forward, however, as the amount of unlabeled data grows, transfer learning is likely to be one of the techniques most heavily used in industry.

Nowadays, people prefer to start from a model pre-trained on a large image dataset such as ImageNet rather than build a whole Convolutional Neural Network from scratch. Transfer learning has several benefits; the main ones are shorter training time, better neural network performance and a reduced need for data.

Methods of Transfer Learning

Generally, there are two ways of applying transfer learning: one is to develop a model from scratch, and the other is to use a pre-trained model.

In the first case, we build a model architecture suited to the training data and carefully study, using several statistical metrics, how well the model learns weights and extracts patterns from the data. After a few rounds of training, depending on the results, the model may need some changes to achieve optimal performance. We can then save the model and use it as a starting point to build another model for a similar task.

The second case, using pre-trained models, is what is most commonly referred to as Transfer Learning. Here, we look for pre-trained models that research institutions and organizations periodically release for general use. These models are available for download along with their weights and can be used to build models for similar datasets.

Transfer Learning Implementation – VGG16 Model

Let us go through an application of Transfer Learning using a pre-trained model called VGG16.

VGG16 is a Convolutional Neural Network model released in 2014 by Karen Simonyan and Andrew Zisserman of the Visual Geometry Group at the University of Oxford. It was among the top entries in that year's ImageNet Large Scale Visual Recognition Challenge (ILSVRC), winning the localization task and finishing second in classification. It is still regarded as one of the classic vision model architectures. It has 16 weight layers, namely 13 convolutional layers and 3 fully connected layers, followed by a softmax layer. It has approximately 138 million parameters. Given below is the architecture of the VGG16 model.

Image Source
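The layer and parameter counts quoted above can be checked with a little arithmetic. The sketch below is only an illustration: the function name and constants are hypothetical, and it assumes the standard VGG16 configuration (3x3 convolutions with biases, a 2x2 max pooling after each block, a 224x224 RGB input and 1,000 output classes).

```python
# Output channels of the 13 convolutional layers, grouped into 5 blocks.
CONV_BLOCKS = [[64, 64], [128, 128], [256, 256, 256],
               [512, 512, 512], [512, 512, 512]]
# Output units of the 3 fully connected layers (1,000 ImageNet classes).
FC_UNITS = [4096, 4096, 1000]


def vgg16_parameter_counts(in_channels=3, image_size=224):
    """Return (conv_params, fc_params) for the assumed VGG16 layout."""
    conv_params = 0
    channels = in_channels
    size = image_size
    for block in CONV_BLOCKS:
        for out_channels in block:
            # 3x3 kernel weights plus one bias per output channel.
            conv_params += 3 * 3 * channels * out_channels + out_channels
            channels = out_channels
        size //= 2  # each block ends with 2x2 max pooling

    fc_params = 0
    features = size * size * channels  # 7 * 7 * 512 for a 224x224 input
    for units in FC_UNITS:
        fc_params += features * units + units  # weights plus biases
        features = units
    return conv_params, fc_params


conv_params, fc_params = vgg16_parameter_counts()
print(f"convolutional layers:   {conv_params:,}")
print(f"fully connected layers: {fc_params:,}")
print(f"total:                  {conv_params + fc_params:,}")
```

Under these assumptions the total comes to 138,357,544, which matches the figure of roughly 138 million parameters; most of them sit in the fully connected layers.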

Step 1: The first step is to import the VGG16 model provided by the Keras library in the TensorFlow framework.
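A minimal sketch of this import, assuming TensorFlow 2.x is installed. The imports of `Flatten`, `Dense` and `Model` are not named in this step; they are included on the assumption that they will be needed when a new classification head is added.

```python
# Pre-trained VGG16 architecture shipped with Keras.
from tensorflow.keras.applications.vgg16 import VGG16
# Building blocks for a new classification head (assumed to be needed later).
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.models import Model
```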

Step 2: Next, we assign the model to a variable “vgg” and download the ImageNet weights by passing them as an argument to the model. We also leave out the model's original classification layers, since they are replaced in Step 4.
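One way this step might look is sketched below. The helper name `load_vgg16_base` and the 224x224 `IMAGE_SIZE` are assumptions made for illustration; `weights` and `include_top` are arguments of the Keras `VGG16` constructor. Wrapping the call in a function makes it easy to pass `weights=None` for a quick offline check of the architecture.

```python
from tensorflow.keras.applications.vgg16 import VGG16

# Assumed input resolution: 224x224 RGB images.
IMAGE_SIZE = (224, 224)


def load_vgg16_base(weights="imagenet"):
    """Return VGG16 without its original classification layers.

    weights="imagenet" downloads the pre-trained weights on first use;
    weights=None builds the same architecture with random weights.
    """
    return VGG16(
        input_shape=IMAGE_SIZE + (3,),  # height, width, colour channels
        weights=weights,
        include_top=False,  # drop the original fully connected layers
    )
```

Calling `vgg = load_vgg16_base()` then gives the variable “vgg” described in this step.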

Step 3: Pre-trained models such as VGG16 and ResNet have already been trained on more than a million ImageNet images to classify 1,000 classes, so we do not need to train their layers again. Hence, we set the “trainable” attribute of every layer of the VGG16 model to “False”.
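Freezing can be sketched as a short loop over the model's layers. The helper name `freeze_layers` is hypothetical; `layers` and `trainable` are standard attributes of Keras models and layers.

```python
def freeze_layers(model):
    """Mark every layer of a Keras model as non-trainable."""
    for layer in model.layers:
        # Frozen layers keep their weights fixed during training.
        layer.trainable = False
    return model
```

With the pre-trained model held in a variable `vgg`, the call would be `freeze_layers(vgg)`.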

Step 4: Since we have frozen all the layers and removed the final classification layers of the pre-trained VGG16 model, we need to add a new classification layer on top of it before training on our dataset. Hence, we flatten the output of the last layer and add a final Dense layer with softmax as the activation function, here with two output units for a two-class prediction model.
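A possible sketch of this step uses the Keras functional API. The helper name `add_classification_head` and its `num_classes` parameter are assumptions for illustration; two classes matches the example in this step.

```python
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.models import Model


def add_classification_head(base_model, num_classes=2):
    """Stack a Flatten and a softmax Dense layer on top of base_model."""
    # Turn the final feature map into a 1-D feature vector.
    x = Flatten()(base_model.output)
    # One output unit per class, with softmax giving class probabilities.
    prediction = Dense(num_classes, activation="softmax")(x)
    return Model(inputs=base_model.input, outputs=prediction)
```

With the frozen base held in `vgg`, the full model would be built with `model = add_classification_head(vgg)`.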

Step 5: In this final step, we print the summary of our model to see the layers of the pre-trained VGG16 model and the two layers that we added on top of it using Transfer Learning.
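Printing the summary is a single call to `model.summary()`. The sketch below wraps it in a hypothetical helper, `summarize`, that also returns the trainable and non-trainable parameter counts so they can be checked in code; it assumes any Keras model is passed in.

```python
import math


def summarize(model):
    """Print the layer summary and return (trainable, non_trainable) counts."""
    model.summary()
    # Each weight tensor contributes the product of its dimensions.
    trainable = sum(math.prod(tuple(w.shape)) for w in model.trainable_weights)
    non_trainable = sum(
        math.prod(tuple(w.shape)) for w in model.non_trainable_weights
    )
    return trainable, non_trainable
```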

The summary shows close to 14.76M total parameters, of which only about 50,000 are trainable because of the condition set in Step 3. These belong to the two layers we added, all of them to the Dense layer, since Flatten has no parameters of its own. The remaining 14.71M parameters are non-trainable.
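These figures can be reproduced by hand. The sketch below assumes a 224x224 input, for which the VGG16 convolutional base outputs a 7x7x512 feature map, and a two-class Dense layer; the constant names are illustrative.

```python
import math

# Parameters in VGG16's 13 convolutional layers (the frozen base).
CONV_BASE_PARAMS = 14_714_688
# Assumed: a 224x224 input, so the base outputs a 7x7x512 feature map.
FEATURE_MAP_SHAPE = (7, 7, 512)
NUM_CLASSES = 2

# Flatten has no parameters; it only reshapes the feature map.
flattened_features = math.prod(FEATURE_MAP_SHAPE)
# Dense layer: one weight per (feature, class) pair plus one bias per class.
head_params = flattened_features * NUM_CLASSES + NUM_CLASSES
total_params = CONV_BASE_PARAMS + head_params

print(f"trainable:     {head_params:,}")       # 50,178
print(f"non-trainable: {CONV_BASE_PARAMS:,}")  # 14,714,688
print(f"total:         {total_params:,}")      # 14,764,866
```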

Once these steps are done, we can train the model like any regular Convolutional Neural Network: we compile it with hyperparameters such as the optimizer and loss function, then begin training with the fit function for a set number of epochs. In this way, we can apply transfer learning to any dataset using one of the many pre-trained models available online, adding a few layers on top according to the number of classes in our training data.
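Compiling and training might be sketched as follows. The helper name `compile_and_fit`, the Adam optimizer, the categorical cross-entropy loss and the default epoch and batch sizes are all assumptions chosen for illustration; the article does not prescribe specific values. The labels are assumed to be one-hot encoded, with one column per class, to match a softmax output.

```python
def compile_and_fit(model, images, labels, epochs=5, batch_size=32):
    """Compile a Keras classifier and train it on in-memory arrays."""
    model.compile(
        optimizer="adam",                 # assumed optimizer
        loss="categorical_crossentropy",  # expects one-hot encoded labels
        metrics=["accuracy"],
    )
    # fit returns a History object holding the per-epoch loss and metrics.
    return model.fit(images, labels, epochs=epochs, batch_size=batch_size)
```

Because the base layers are frozen, only the weights of the newly added layers are updated during `fit`.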

Conclusion

In this article, we have covered the basics of Transfer Learning, its applications and its implementation with a sample pre-trained VGG16 model from the Keras library. It has also been reported that using the pre-trained weights from only the last two layers of the network has the biggest effect on convergence, which becomes faster because features are reused. Transfer Learning has many applications in building models today. Most importantly, AI applications in healthcare need several such pre-trained models due to their large size. Although Transfer Learning may be in its early stages, in the coming years it is likely to be one of the most widely used methods for training models on large datasets with greater efficiency and accuracy.

Author Bio:

Pavan Vadapalli, Director of Engineering @ upGrad, an ed-tech platform in India which provides data science and machine learning courses. Motivated to leverage technology to solve problems. Seasoned leader for startups and fast moving orgs. Working on solving problems of scale and long term technology strategy.

Source: https://www.analyticsinsight.net/transfer-learning-in-deep-learning/