AI is a mega-theme that can create significant value across business sectors. It could contribute up to USD 15.7 trillion to the global economy in 2030: USD 6.6 trillion from increased productivity and USD 9.1 trillion from consumption effects.

While the technology holds tremendous potential, it has a problem with impartiality. If not tackled, this could continue to hinder efforts to enhance diversity, equity and inclusion.

At its core, AI algorithms train on specific datasets and

find solutions for real-world problems. As society and organisations

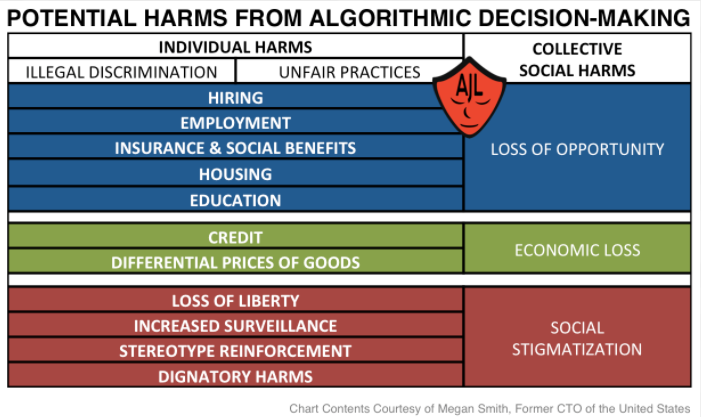

increasingly adopt algorithmic decision-making, we must be cognizant of the harm

that could arise from algorithmic bias.

Exhibit 1: Automated decision-making using sensitive data such as information on race, gender or familial status can affect individuals’ eligibility for housing, employment, or other core services – the table lists the various spheres of life where automated decision-making can cause injury and notes whether each sort of harmful effect is illegal or unfair

Source: www.gendershades.org

Why do bias-related issues surface with AI?

There are several instances of AI-powered systems acting in a discriminatory manner. In one of the most widely publicised examples, a prominent credit card issuer reportedly set a woman's credit limit 20 times lower than that of her husband, even though she had better credit scores and a similar financial history.

This raises a key issue: although an optimisation algorithm is not designed to solve for societal factors, it is critical to understand how it makes decisions, which input factors it uses and how they affect the final outcome. Relying on biases being discovered by chance can take you only so far.
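One common, model-agnostic way to see which input factors drive a model's decisions is permutation importance: shuffle one input column at a time and measure how far the model's agreement with its original decisions drops. The sketch below is a minimal, dependency-free version of that technique (our choice of method; the article does not prescribe one). The credit model, the feature names `income_score`, `postcode_flag` and `noise`, and the synthetic data are all hypothetical.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def accuracy(model: Model, X: List[Row], y: List[int]) -> float:
    """Share of rows where the model's decision matches the reference label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[Row], y: List[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Average drop in accuracy when one input column is randomly shuffled.

    A large drop means the model leans heavily on that input; zero means
    the model's decisions do not depend on it at all.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with only column j replaced by its shuffled value.
            X_perm = [list(row[:j]) + [value] + list(row[j + 1:])
                      for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical credit model over [income_score, postcode_flag, noise].
def credit_model(row: Row) -> int:
    return int(row[0] + 0.3 * row[1] > 0.6)


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.randint(0, 1), data_rng.random()]
     for _ in range(200)]
y = [credit_model(row) for row in X]  # explain the model's own decisions

for name, score in zip(["income_score", "postcode_flag", "noise"],
                       permutation_importance(credit_model, X, y)):
    print(f"{name}: {score:.3f}")
```

Here `noise` scores exactly zero because the model ignores it, while a non-zero score for `postcode_flag` would prompt a closer look, since postcodes can act as a proxy for protected characteristics. scikit-learn offers a fuller implementation as `sklearn.inspection.permutation_importance`.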

The data sets used to train an AI algorithm influence the efficacy of its decision-making. Facial recognition technology, for example, has been found to perform worse on darker-skinned individuals because the image data used for training is skewed towards lighter-skinned individuals.

The results of the Gender Shades project, which evaluated the accuracy of AI-powered gender classification products used in computer vision, show that accuracy is at its worst for darker-skinned females.
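The Gender Shades finding is a reminder to report accuracy per subgroup rather than only in aggregate. The minimal sketch below uses hypothetical toy labels and generic group names (`a`, `b`); the numbers are illustrative only and are not the project's results. It shows how a 90% overall accuracy can hide a smaller group for which the model is right only half the time.

```python
from collections import defaultdict
from typing import Dict, Hashable, List


def accuracy_by_group(y_true: List[int], y_pred: List[int],
                      groups: List[Hashable]) -> Dict[Hashable, float]:
    """Accuracy computed separately for each subgroup label."""
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy data: group "b" is under-represented (2 of 10 samples).
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0, 1, 1]
groups = ["a"] * 8 + ["b"] * 2

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall)    # 0.9
print(per_group)  # {'a': 1.0, 'b': 0.5}
```

The same idea scales to any metric (false positive rate, error rate) and to intersectional groups such as skin type combined with gender, by using a tuple as the group label.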

What steps are regulators taking to make AI-based algorithms fairer?

The concept of fairness itself is rooted in societal norms, and the trade-offs that people are willing to accept depend on the values a society espouses. In a recent example, contact tracing, which would have been considered a major privacy violation before the pandemic, has become widely accepted given its public health benefits. Context is therefore critical when considering issues around gender, ethnicity, age, privacy and so on.

That said, it is essential to have a framework of expected standards when developing AI algorithms, and regulatory bodies have started to weigh in on the issue.

The proposed Algorithmic Accountability Act in the US would require periodic assessments of high-risk systems that involve personal information or make automated decisions, such as systems that use AI or machine learning. High-risk systems are those that may contribute to inaccuracy, bias or discrimination, or that facilitate decision-making about sensitive aspects of consumers' lives by evaluating consumer behaviour.

In the EU, the European Parliament has adopted proposals for an ethics framework for AI and a future-oriented civil liability framework to help adjudicate AI-related issues, alongside its work on the Digital Services Act.

What steps can developers take to eliminate bias in AI-based algorithms?

Given the known issues with AI-based algorithms, developers need to think through the project intent, the impact of system design and possible limitations linked to data availability.

The intent of any new project must be a key consideration. A thorough assessment can reveal unintended consequences and the basic human ethical values that could be affected.

For example, in a study published in 2018, algorithms were trained to distinguish the faces of Uyghur people, a predominantly Muslim minority ethnic group in China, from those of Korean and Tibetan ethnicity. This raised concerns in the scientific community as such studies could be used to train surveillance algorithms.

When evaluating the design and output of AI algorithms, developers must distinguish between correlation (the tendency of one variable to move with another) and causation (cause and effect). Exhibit 2 depicts how mistaking correlation for causation can suggest inaccurate linkages.

For AI algorithms, it is critical to identify the variables affecting the outcome and any relationships between them, to ensure that biases are not encoded into the decision-making process.

Exhibit 2: Explaining correlation and causation

Source: http://sundaskhalid.medium.com
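One simple way to look for relationships that could encode bias is to check how strongly each input correlates with a protected attribute: a feature such as a postcode can act as a proxy even when the protected attribute itself is excluded from the model. The sketch below assumes numeric features and a binary protected attribute; the feature names, the toy data and the 0.5 threshold are hypothetical. A high correlation only flags a relationship for human review and does not establish causation, while a low Pearson coefficient does not rule out non-linear proxies.

```python
import math
from typing import Dict, List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        return 0.0
    return cov / (sd_x * sd_y)


def flag_possible_proxies(features: Dict[str, List[float]],
                          protected: List[float],
                          threshold: float = 0.5) -> Dict[str, float]:
    """Return features whose absolute correlation with the protected attribute
    meets the threshold. A flag is a prompt for review, not proof of causation."""
    flagged = {}
    for name, column in features.items():
        r = pearson(column, protected)
        if abs(r) >= threshold:
            flagged[name] = r
    return flagged


# Hypothetical toy data: 1 = member of the protected group.
protected = [1, 1, 1, 1, 0, 0, 0, 0]
features = {
    "postcode_flag": [1, 1, 1, 0, 0, 0, 0, 0],
    "years_employed": [3, 5, 2, 6, 4, 5, 3, 2],
}
print(flag_possible_proxies(features, protected))  # flags only postcode_flag (r about 0.77)
```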

Finally, the output of any AI algorithm is only as good as its input data set. In many cases, good data is scarce for groups outside the majority sample. Several data de-biasing techniques exist today, and many show promising results in reducing bias. However, we must understand the limitations of any technique based on the information available today, and must continuously test and monitor output samples.
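As one illustration of a data de-biasing technique, the sketch below implements reweighing, a pre-processing method described by Kamiran and Calders that weights each training sample by P(group) × P(label) / P(group, label) so that group membership and label become independent in the weighted data. It is paired with a simple output check, the ratio of the lowest to the highest group selection rate, which is often compared against 0.8 (the "four-fifths" rule of thumb from US employment-selection guidelines). Both are our choices for illustration rather than methods named in the article, and the group labels and data are hypothetical. In practice the ratio check would be run on the model's live decisions, not only on historical labels.

```python
from collections import Counter
from typing import Dict, Hashable, List, Tuple


def reweighing_weights(groups: List[Hashable], labels: List[int]) -> List[float]:
    """Per-sample weights w(g, y) = P(g) * P(y) / P(g, y).

    After weighting, every group has the same weighted positive rate.
    """
    n = len(labels)
    count_g = Counter(groups)
    count_y = Counter(labels)
    count_gy = Counter(zip(groups, labels))
    return [count_g[g] * count_y[y] / (n * count_gy[(g, y)])
            for g, y in zip(groups, labels)]


def selection_rate_ratio(groups: List[Hashable],
                         decisions: List[int]) -> Tuple[float, Dict[Hashable, float]]:
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    totals = Counter(groups)
    positives = Counter(g for g, d in zip(groups, decisions) if d == 1)
    rates = {g: positives[g] / totals[g] for g in totals}
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return ratio, rates


# Hypothetical historical data in which group "b" was approved less often.
groups = ["a"] * 6 + ["b"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

ratio, rates = selection_rate_ratio(groups, labels)
print(round(ratio, 3))  # 0.375, well below 0.8

weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])
# [0.75, 0.75, 0.75, 0.75, 1.5, 1.5, 2.0, 0.667, 0.667, 0.667]

# Weighted positive rate per group after reweighing.
weighted_rates = {}
for g in sorted(set(groups)):
    idx = [i for i, grp in enumerate(groups) if grp == g]
    weighted_rates[g] = (sum(weights[i] * labels[i] for i in idx)
                         / sum(weights[i] for i in idx))
print({g: round(r, 3) for g, r in weighted_rates.items()})  # {'a': 0.5, 'b': 0.5}
```

Reweighing leaves the data itself untouched and only changes how much each sample counts during training, which is one reason it is a popular first step; it does not, however, address proxies or labels that are themselves biased.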

Conclusion

In summary, AI has the potential to provide innovative ways to enable progress on environmental and social issues as well as human welfare. We need, however, to take a step back before we build a system that mirrors the diversity and inclusion issues encountered in the real world. We should be using the latent power of AI to aim for better than the status quo.


Any views expressed here are those of the author as of the date of publication, are based on available information, and are subject to change without notice. Individual portfolio management teams may hold different views and may take different investment decisions for different clients. The views expressed in this podcast do not in any way constitute investment advice.

The value of investments and the income they generate may go down as well as up and it is possible that investors will not recover their initial outlay. Past performance is no guarantee for future returns.

Investing in emerging markets, or specialised or restricted sectors, is likely to be subject to higher-than-average volatility due to a high degree of concentration, greater uncertainty because less information is available, lower liquidity, or greater sensitivity to changes in market conditions (social, political and economic conditions).

Some emerging markets offer less security than the majority of international developed markets. For this reason, services for portfolio transactions, liquidation and conservation on behalf of funds invested in emerging markets may carry greater risk.


Source: https://investors-corner.bnpparibas-am.com/investing/addressing-bias-in-artificial-intelligence/