Our last AI post on this blog, the New (if Decidedly Not ‘Final’) Frontier of

Artificial Intelligence Regulation, touched on both the Federal

Trade Commission’s (FTC) April 19, 2021, AI guidance and the European

Commission’s proposed AI Regulation. The FTC’s 2021

guidance referenced, in large part, the FTC’s April 2020 post

“Using

Artificial Intelligence and Algorithms.” The recent FTC

guidance also relied on older FTC work on AI, including a January

2016 report, “Big Data: A Tool for Inclusion or

Exclusion?,” which in turn followed a September 15, 2014,

workshop on the same topic. The Big Data workshop addressed data

modeling, data mining and analytics, and gave us a prospective look

at what would become an FTC strategy on AI.

The FTC’s guidance begins with the data, and the 2016

guidance on big data and subsequent AI development addresses this

most directly. The 2020 guidance then highlights important

principles such as transparency, explainability, fairness,

accuracy and accountability for organizations to consider. And the

2021 guidance elaborates on how consent, or opt-in, mechanisms work

when an organization is gathering the data used for model

development.

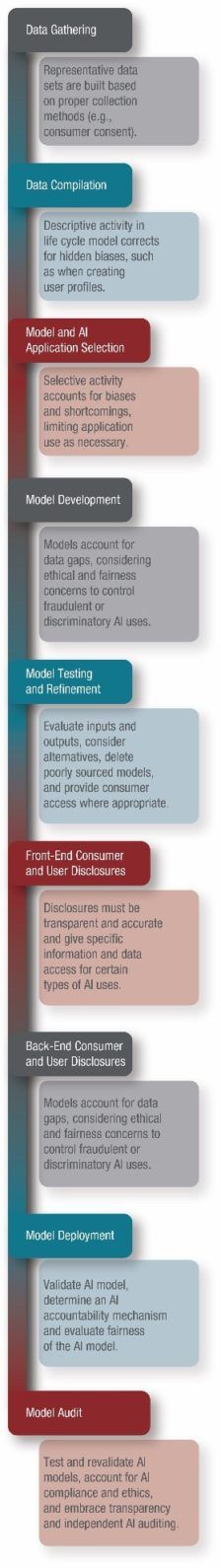

Taken together, the three sets of FTC guidance – the 2021, 2020,

and 2016 guidance – provide insight into the FTC’s approach to

organizational use of AI, which spans a vast portion of the data

life cycle, including the creation, refinement, use and back-end

auditing of AI. As a whole, the various pieces of FTC guidance also

provide a multistep process for what the FTC appears to view as

responsible AI use. In this post, we summarize our takeaways from

the FTC’s AI guidance across the data life cycle to provide a

practical approach to responsible AI deployment.

Data Gathering

– Evaluation of a data set should assess the quality of

the data (including accuracy, completeness and representativeness)

– and if the data set is missing certain population data, the

organization must take appropriate steps to address and remedy that

issue (2016).

– An organization must honor promises made to consumers

and provide consumers with substantive information about the

organization’s data practices when gathering information for AI

purposes (2016). Any related opt-in mechanisms for such data

gathering must operate as disclosed to consumers (2021).
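One way to make the representativeness point concrete is to compare each group's share of a data set against a reference share. The sketch below is only an illustration under our own assumptions: the function name `representation_gaps`, the `region` field, the reference shares and the 5 percent tolerance are all hypothetical, and the FTC guidance does not prescribe any particular metric or threshold.

```python
from collections import Counter


def representation_gaps(records, group_key, reference_shares, tolerance=0.05):
    """Return groups whose share of the data set differs from a reference
    share by more than `tolerance` (an illustrative threshold, not an FTC one)."""
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps


# Hypothetical data set: 8 urban records and 2 rural records.
sample = [{"region": "urban"}] * 8 + [{"region": "rural"}] * 2
# Hypothetical reference shares for the population the model will serve.
reference = {"urban": 0.6, "rural": 0.4}

gaps = representation_gaps(sample, "region", reference)
print(gaps)
```

A group that appears in the result is a prompt for further review of the data set, not a legal conclusion about it.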

Data Compilation

– An organization should recognize the data compilation

step as a “descriptive activity,” which the FTC defines

as a process aimed at uncovering and summarizing “patterns or

features that exist in data sets” – a reference to data mining scholarship (2016) (note that the

FTC’s referenced materials originally at mmds.org are now

redirected).

– Compilation efforts should be organized around a life

cycle model that provides for compilation and consolidation before

moving on to data mining, analytics and use (2016).

– An organization must recognize that there may be

uncorrected biases in underlying consumer data that will surface in

a compilation; therefore, an organization should review data sets

to ensure hidden biases are not creating unintended discriminatory

impacts (2016).

– An organization should maintain reasonable security over

consumer data (2016).

– If data are collected from individuals in a deceitful or

otherwise inappropriate manner, the organization may need to delete

the data (2021).

Model and AI Application Selection

– An organization should recognize the model and AI

application selection step as a predictive activity, where an

organization is using “statistical models to generate new

data” – a reference to predictive analytics scholarship (2016).

– An organization must determine if a proposed data model

or application properly accounts for biases (2016). Where there are

shortcomings in the data model, the model’s use must be

accordingly limited (2021).

– Organizations that build AI models may “not sell

their big data analytics products to customers if they know or have

reason to know that those customers will use the products for

fraudulent or discriminatory purposes.” An organization must,

therefore, evaluate potential limitations on the provision or use

of AI applications to ensure there is a “permissible

purpose” for the use of the application (2016).

– Finally, as a general rule, the FTC asserts that under

the FTC Act, a practice is patently unfair if it causes more harm

than good (2021).

Model Development

– Organizations must design models to account for data

gaps (2021).

– Organizations must consider whether their reliance on

particular AI models raises ethical or fairness concerns

(2016).

– Organizations must consider the end uses of the models

and cannot create, market or sell “insights” used for

fraudulent or discriminatory purposes (2016).

Model Testing and Refinement

– Organizations must test the algorithm before use (2021).

This testing should include an evaluation of AI outcomes

(2020).

– Organizations must consider prediction accuracy when

using “big data” (2016).

– Model evaluation must focus on both inputs and outcomes,

and AI models may not discriminate against a

protected class (2020).

– Input evaluation should

include considerations of ethnically based factors or proxies for

such factors.

– Outcome evaluation is

critical for all models, including facially neutral models.

– Model evaluation should consider alternative models, as

the FTC can challenge models if a less discriminatory alternative

would achieve the same results (2020).

– If data are collected from individuals in a deceptive,

unfair, or illegal manner, deletion of any AI models or algorithms

developed from the data may also be required (2021).
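As a rough illustration of outcome evaluation, the sketch below computes the rate of favorable outcomes for each group and the ratio between the lowest and highest rates. This is our own simplified example, not a method drawn from the FTC guidance: the function names, the group labels "A" and "B" and the sample decisions are hypothetical, and the appropriate metric and any threshold for concern would depend on the applicable law and facts.

```python
from collections import defaultdict


def favorable_rates(decisions):
    """decisions: iterable of (group, favorable) pairs, where favorable is a bool.
    Returns the share of favorable outcomes for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in decisions:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Ratio of the lowest group rate to the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical model outputs: group A approved 6 of 10, group B approved 3 of 10.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = favorable_rates(decisions)
ratio = rate_ratio(rates)
print(rates, ratio)
```

Because the check looks only at outcomes, it can be run on a facially neutral model that never receives a protected characteristic as an input.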

Front-End Consumer and User Disclosures

– Organizations must be transparent and not mislead

consumers “about the nature of the interaction” – and not

utilize fake “engager profiles” as part of their AI

services (2020).

– Organizations cannot exaggerate an AI model’s

efficacy or misinform consumers about whether AI results are fair

or unbiased. According to the FTC, deceptive AI statements are

actionable (2021).

– If algorithms are used to assign scores to consumers, an

organization must disclose key factors that affect the score,

rank-ordered according to importance (2020).

– Organizations providing certain types of reports through

AI services must also provide notices to the users of such reports

(2016).

– Organizations building AI models based on consumer data

must, at least in some circumstances, allow consumers access to the

information supporting the AI models (2016).
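To show what a rank-ordered list of key factors could look like, the sketch below assumes a simple linear scoring model in which each factor's contribution is its weight multiplied by the consumer's value. That assumption, the function name `ranked_key_factors`, and the factor names and weights are ours for illustration only; the FTC guidance does not specify how importance should be measured, and more complex models would need a different attribution method.

```python
def ranked_key_factors(weights, applicant, top_n=3):
    """Rank factors by the absolute size of their contribution to a linear score.
    Assumes score = sum(weight * value); this is an illustrative simplification."""
    contributions = {
        name: weights[name] * applicant.get(name, 0.0) for name in weights
    }
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return ranked[:top_n]


# Hypothetical weights and consumer values.
weights = {"payment_history": 2.0, "utilization": -1.5, "account_age_years": 0.25}
applicant = {"payment_history": 0.9, "utilization": 0.8, "account_age_years": 2}

factors = ranked_key_factors(weights, applicant)
for name, contribution in factors:
    print(name, contribution)
```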

Back-End Consumer and User Disclosures

– Automated decisions based on third-party data may

require the organization using the third-party data to provide the

consumer with an “adverse action” notice (for example, if

under the Fair Credit Reporting Act, 15 U.S.C. § 1681

(Rev. Sept. 2018), such decisions deny an applicant an

apartment or charge them a higher rent) (2020).

– General “you don’t

meet our criteria” disclosures are not sufficient. The FTC

expects end users to know what specific data are

used in the AI model and how the data are used by

the AI model to make a decision (2020).

– Organizations that change specific terms of deals based

on automated systems must disclose the changes and reasoning to

consumers (2020).

– Organizations should provide consumers with an

opportunity to amend or supplement information used to make

decisions about them (2020) and allow consumers to correct errors

or inaccuracies in their personal information (2016).

Model Deployment

– When deploying models, organizations must confirm that

the AI models have been validated to ensure they work as intended

and do not illegally discriminate (2020).

– Organizations must carefully evaluate and select an

appropriate AI accountability mechanism, transparency framework

and/or independent standard, and implement as applicable

(2020).

– An organization should determine the fairness of an AI

model by examining whether the particular model causes, or is

likely to cause, substantial harm to consumers that is not

reasonably avoidable and not outweighed by countervailing benefits

(2021).

Model Audit

– Organizations must test AI models periodically to

revalidate that they function as intended (2020) and to ensure a

lack of discriminatory effects (2021).

– Organizations must account for compliance, ethics,

fairness and equality when using AI models, taking into account

four key questions (2016; 2020):

– How representative is the data set?

– Does the AI model account for biases?

– How accurate are the AI predictions?

– Does the reliance on the data set raise ethical or fairness concerns?

– Organizations must embrace transparency and

independence, which can be achieved in part through the following

(2021):

– Using independent, third-party audit processes and auditors, which are immune to the intent of the AI model.

– Ensuring data sets and AI source code are open to external inspection.

– Applying appropriate recognized AI transparency frameworks, accountability mechanisms and independent standards.

– Publishing the results of third-party AI audits.

– Organizations remain accountable throughout the AI data

life cycle under the FTC’s recommendations for AI transparency

and independence (2021).

The content of this article is intended to provide a general

guide to the subject matter. Specialist advice should be sought

about your specific circumstances.

Source: https://www.mondaq.com/unitedstates/new-technology/1072608/the-not-so-hidden-ftc-guidance-on-organizational-use-of-artificial-intelligence-ai-from-data-gathering-through-model-audits