Last March, Iowa State University mathematicians Leslie Hogben and Carolyn Reinhart received a welcome surprise. Adam Wagner, a postdoctoral fellow at Tel Aviv University, emailed to let them know he’d answered a question they’d published the week before — though not by any of the usual math or brute-force computing techniques. Instead, he used a game-playing machine.

“I was very happy to have the question answered. I was excited that Adam had done it with AI,” said Hogben.

Hogben and Reinhart’s problem was one of four that Wagner solved using artificial intelligence. And while AI has contributed to mathematics before, Wagner’s use of it was unconventional: He turned the hunt for solutions to Hogben and Reinhart’s question into a kind of contest, using an approach other researchers have applied with great success to popular strategy games like chess.

“I just saw all these articles about these companies like DeepMind, for example, that have created these programs that can play chess, Go, and Atari games at really superhuman levels,” Wagner said. “I thought, how nice would it be if you could somehow use these self-learning algorithms, these reinforcement learning algorithms, and find a way to use them in mathematics as well?”

Wagner began trying to use a similar strategy to produce counterexamples — examples that contradict (or “counter”) a mathematical hypothesis and thereby prove it false. He reimagined the search for counterexamples as a guessing game, then tried his program out on dozens of open problems in mathematics.

“I really think this is very beautiful work,” said Geordie Williamson, a professor at the University of Sydney who has also combined machine learning with mathematical research.

Machine learning programs “teach” computers specific capabilities. Reinforcement learning models — the type used by both Wagner and DeepMind — take a hands-off approach to that instruction, letting the computer practice a task (such as a game) repeatedly. The model intervenes only to assess the computer’s work. In response, the computer adjusts its strategy as it learns which approaches lead to better scores.

Reinforcement learning has proved to be a powerful way to train models on complicated strategy games. Wagner’s vision for adapting it to research mathematics was surprisingly simple.

To get a sense for how reinforcement learning can be used to uncover counterexamples, consider this scenario. Suppose there is a mathematical conjecture predicting that the expression 2x – x2 is negative for any real value of x. This conjecture isn’t true — and you can prove that by producing a value of x (a counterexample) for which it’s false. (Any number between 0 and 2 is a counterexample, with the value of 2x – x2 peaking at x = 1.)

To do that using reinforcement learning, Wagner might let his model loose on a game that consists of guessing a real number x. After playing, the model would receive its score: the value of 2x – x2. Initially, with no idea what numbers maximize the score, the model would guess wildly. But once the model played enough times, a pattern would become apparent: The closer x is to 1, the higher the score. By following this pattern, the model would inevitably stumble upon a counterexample once it guesses a number between 0 and 2.

Wagner applied the same basic scheme to dozens of problems, varying only the score and the types of moves the computer was allowed to make. All of the problems were from discrete math, which deals with objects that are separate and distinct — think whole numbers, rather than the continuum of the number line.

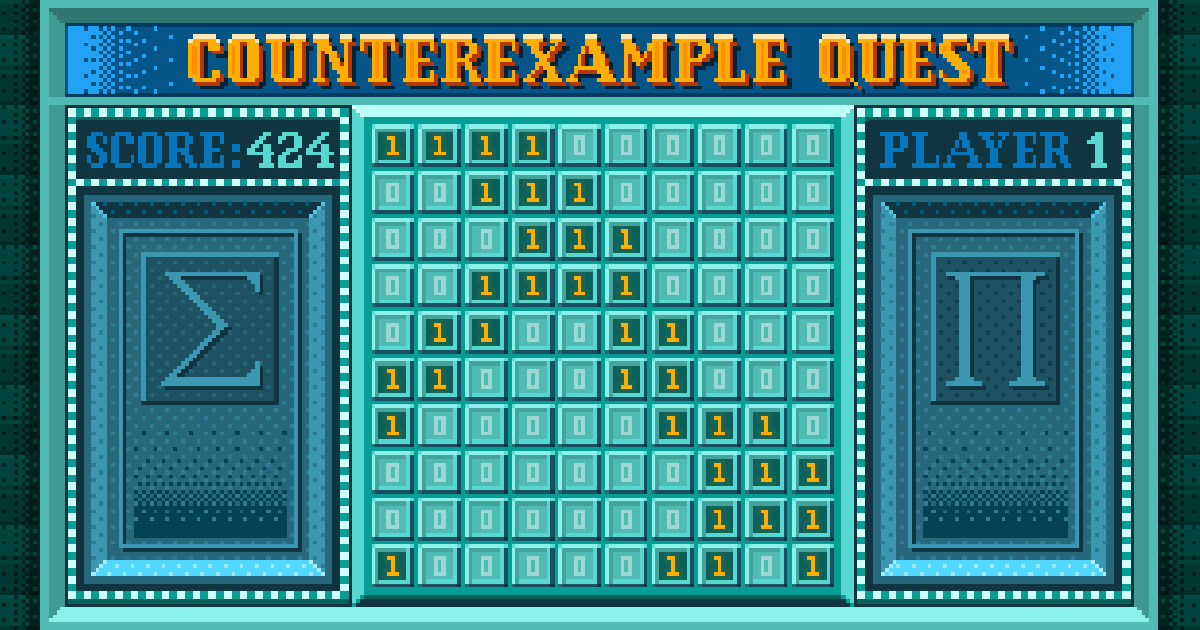

The discrete nature of the problems made it easier for Wagner to build a model. For example, one problem posed by Richard Brualdi and Lei Cao in 2020 was about tables of numbers (called matrices) whose entries are all either 0 or 1. A computer can create such a matrix by cycling through each available spot and selecting either 0 or 1.

“All of these games are just a finite sequence of finite decisions,” said Wagner. (Allowing for games with infinitely many steps would have introduced new complications.)

Brualdi and Cao’s problem concerned a particular set of 0-1 matrices that they called 312-pattern avoiding, in reference to the 3 x 3, “312 matrix,” which represents mixing up the entries of a three-dimensional vector so that (a,b,c) becomes (c,a,b). A 0-1 matrix is 312-pattern avoiding if there’s no way to delete some of its rows and columns and end up with the 312 matrix.

More specifically, Brualdi and Cao’s question was about an attribute of the matrix called its “permanent,” a number obtained by a complicated formula that involves adding and multiplying all the matrix entries. They wanted to know which 312-pattern-avoiding matrices had the largest permanent and how big that permanent could possibly get, formulating guesses for square matrices of any size.

To answer their question, Wagner devised a game for his model: guess a 0-1 matrix. Entry by entry, it chose either 0 or 1. The larger the permanent, the higher the model’s score, with points deducted for not avoiding the 312 matrix. The model found examples that beat Brualdi and Cao’s guesses once the matrices were 4 x 4 or larger.

The new work is an exciting proof of concept, though its actual contributions to mathematics are modest so far.

“None of [the questions the model solved] were super-important conjectures,” said Wagner.

And computers still can’t match the prowess of a human brain in many ways that are important to math research. In trying to disprove one of the conjectures for the new paper, Wagner’s model hit a wall. It had too little computing power to find a counterexample on its own. Despite that, it generated a trail of guesses that enabled Wagner to easily find one himself.

“Just looking at the best thing it constructed, if you take it to any mathematician, and it doesn’t have to be a graph theorist, it’s completely obvious what you should be trying,” said Wagner.

Even for Brualdi and Cao’s example, the model needed a little help once the matrices got too big.

It will be a long time, if ever, before mathematicians cede their field to machines. Meanwhile, those who want to capitalize on AI will need to keep their eyes open for opportunities to incorporate it into research. That’s how other new technologies, such as electricity, ultimately revealed their potential, said Williamson, and he sees no reason AI should be different.

“We didn’t find a problem and say, ‘We must use electricity to solve this problem.’ We much more said, ‘What are the simple little things we can do?’”

Source: https://www.quantamagazine.org/in-new-math-proofs-artificial-intelligence-plays-to-win-20220307/